As experts in telepresence and robotic teleoperation, the Awabot Intelligence team has used WebRTC for many years in projects that require remote robot control with video feedback. What is WebRTC, and how does this open-source technology work? Here is an overview.

What is WebRTC?

In the early days of video calling on the Web, tools such as Skype required users to download a “heavy” application onto their computers. Over time, usage has shifted toward simplicity: these same services became available directly in a web browser, almost “in one click”, and thus lighter for the user. This is made possible by WebRTC, short for “Web Real-Time Communication”.

This open-source technology provides a programming interface for real-time communication, including the transport of video and audio streams, in a web environment. It is used today by well-known video conferencing applications such as Google Meet and Discord, and by telepresence solutions such as, as it happens, BEAM.

A project? Contact our WebRTC experts

How does WebRTC work?

WebRTC brought real-time communication of media streams to the browser, a capability the Web had clearly lacked until then. Before it, the options for streaming video and audio in a browser were limited to two approaches:

- WebSockets, allowing persistent bi-directional data transport;

- RTSP streams, geared toward one-way video transport.

The WebRTC specification, published by the World Wide Web Consortium (W3C), has therefore established itself as a unified solution. This software component makes it possible to encode, transport, decode, and synchronize video and audio streams peer-to-peer between two computers.

Preparing media content for transport using the codec

A codec (a contraction of “coder-decoder”) represents a video data stream in a compact, optimized form.

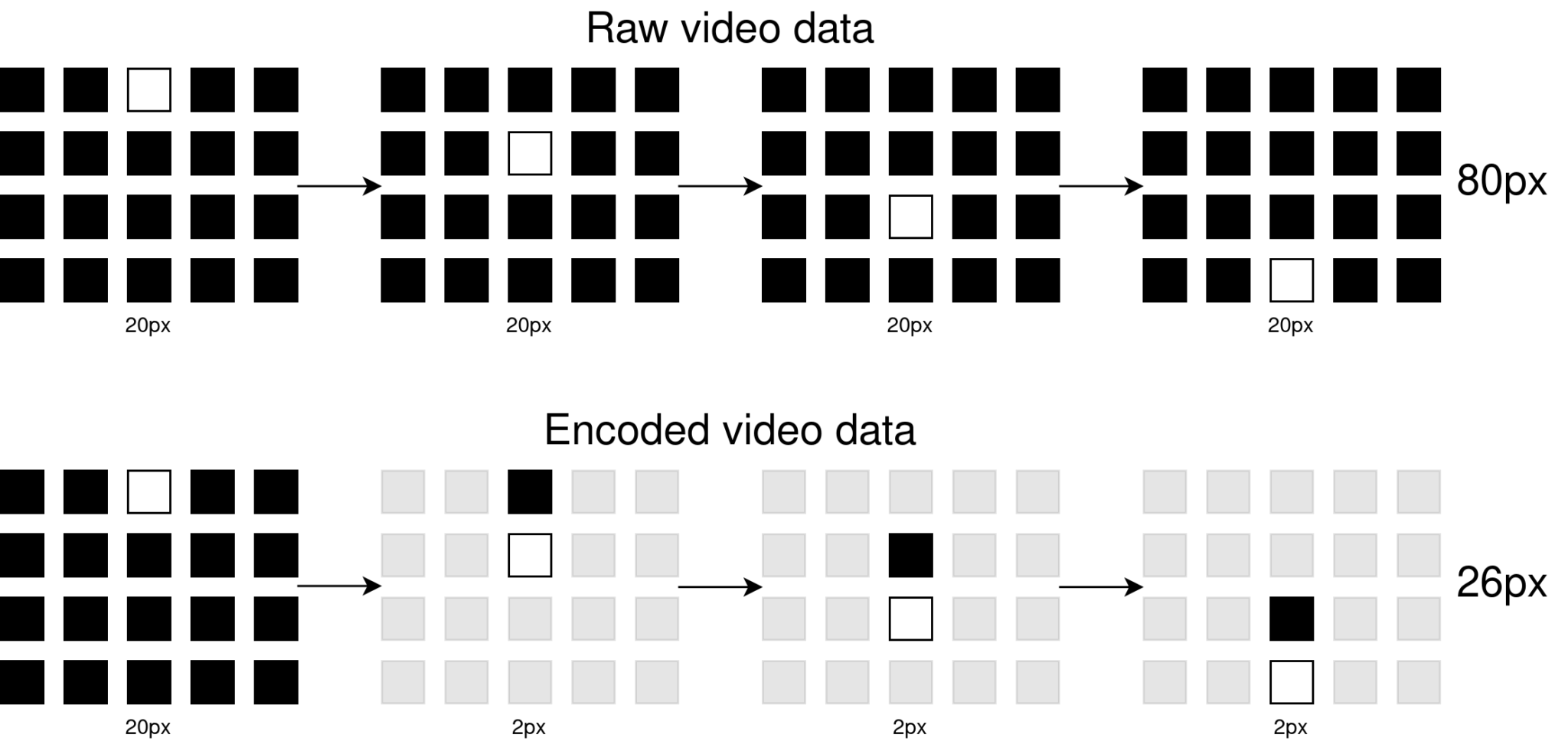

As a reminder, a video is simply a succession of still images displayed at a certain frame rate, generally expressed in frames per second (fps). Unlike a raw video stream, in which every frame is recorded in its entirety (every single pixel), an “encoded” video stream contains only the “useful” parts.
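To give an idea of the volumes involved, here is the arithmetic for an uncompressed stream, written as a small Python function. The 1280 x 720 resolution, 24 bits per pixel and 30 fps are illustrative assumptions, not measurements from a robot.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bits per second needed to send every pixel of every frame, uncompressed."""
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps


# Illustrative 720p stream: 24-bit color (8 bits per RGB channel) at 30 frames per second.
rate = raw_bitrate_bps(1280, 720, 24, 30)
print(f"{rate / 1e6:.1f} Mbit/s")  # prints "663.6 Mbit/s"
```

At roughly 660 Mbit/s, such a raw stream would exceed the upload capacity of most home and mobile connections, which is why video is encoded before being sent.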

Most current codecs work on the same principle: a full frame (a keyframe) is sent first, then the following “n” frames contain only the differences from the previous frame.

Example: imagine a video showing a white dot moving on a black background. From one frame to the next, most of the frame does not change. Only two pixels do: the one the dot leaves turns from white to black, and the one it moves to turns from black to white. The goal is therefore to record only this small variation instead of the entire frame.
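The white-dot example can be sketched in Python. This is a deliberately naive illustration of the “full frame, then differences” idea, not how a real codec such as VP8 or H.264 works internally (real codecs predict motion and transform blocks of pixels); all function names are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]            # 0 = black pixel, 1 = white pixel
Delta = Dict[Tuple[int, int], int]  # (row, col) -> new pixel value


def make_frame(width: int, height: int, dot: Tuple[int, int]) -> Frame:
    """Black frame with a single white pixel at position `dot` (row, col)."""
    frame = [[0] * width for _ in range(height)]
    row, col = dot
    frame[row][col] = 1
    return frame


def diff(previous: Frame, current: Frame) -> Delta:
    """Keep only the pixels that changed since the previous frame."""
    return {
        (r, c): current[r][c]
        for r in range(len(current))
        for c in range(len(current[r]))
        if current[r][c] != previous[r][c]
    }


def apply_delta(previous: Frame, delta: Delta) -> Frame:
    """Decoder side: rebuild the current frame from the previous one."""
    frame = [row[:] for row in previous]
    for (r, c), value in delta.items():
        frame[r][c] = value
    return frame


def encode(frames: List[Frame]) -> Tuple[Frame, List[Delta]]:
    """First frame sent in full (keyframe), then one delta per following frame."""
    deltas = [diff(prev, cur) for prev, cur in zip(frames, frames[1:])]
    return frames[0], deltas


def decode(keyframe: Frame, deltas: List[Delta]) -> List[Frame]:
    frames = [keyframe]
    for delta in deltas:
        frames.append(apply_delta(frames[-1], delta))
    return frames


# A white dot moving one pixel to the right on an 8x8 black background.
video = [make_frame(8, 8, (3, x)) for x in range(4)]
keyframe, deltas = encode(video)
print([len(d) for d in deltas])  # prints "[2, 2, 2]": 2 changed pixels per frame instead of 64
```

Decoding the keyframe plus the three small deltas gives back the original four frames exactly; a real codec may additionally discard detail (lossy coding) to save more bandwidth.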

To sum up, a codec aims to represent the information in a stream as efficiently as possible, even at the cost of reduced video quality when storage or, in our case, transport requires it.

Enabling real-time, peer-to-peer communication

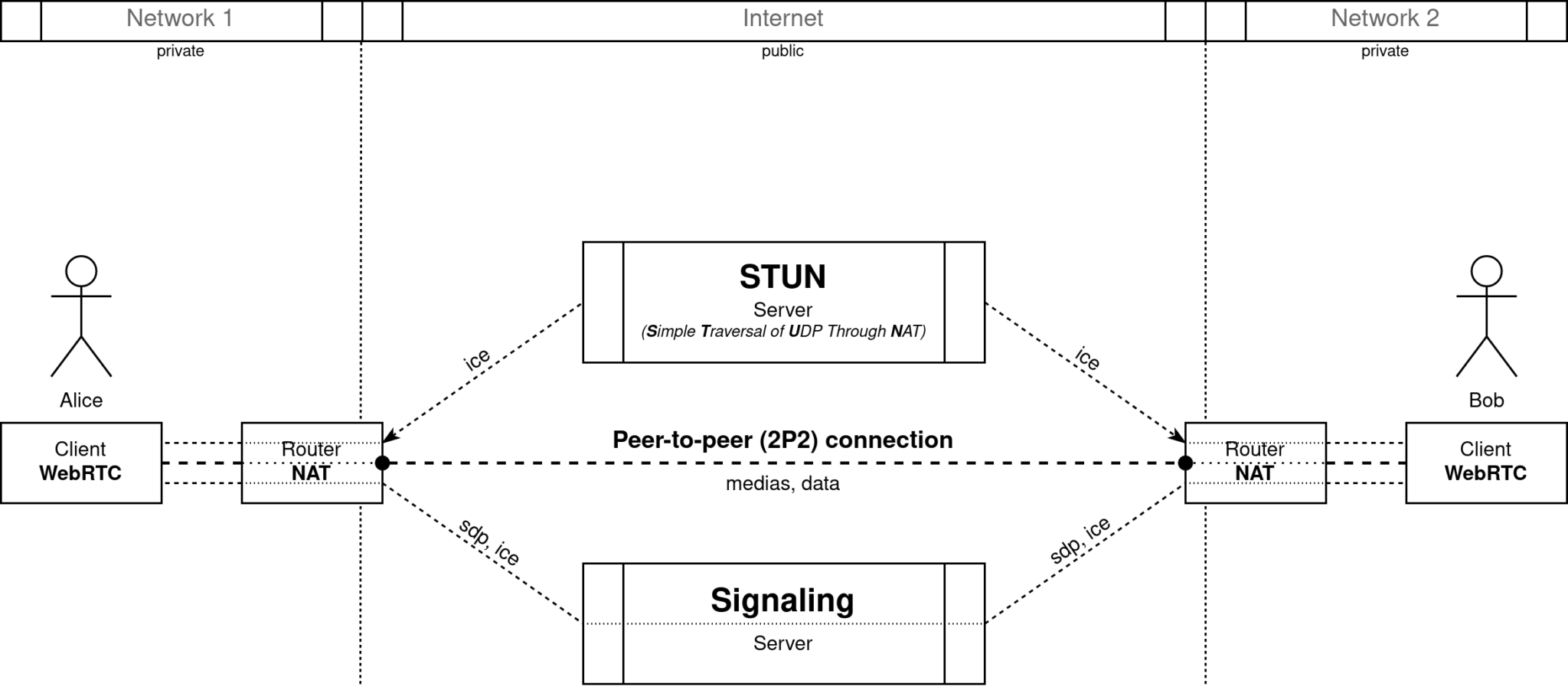

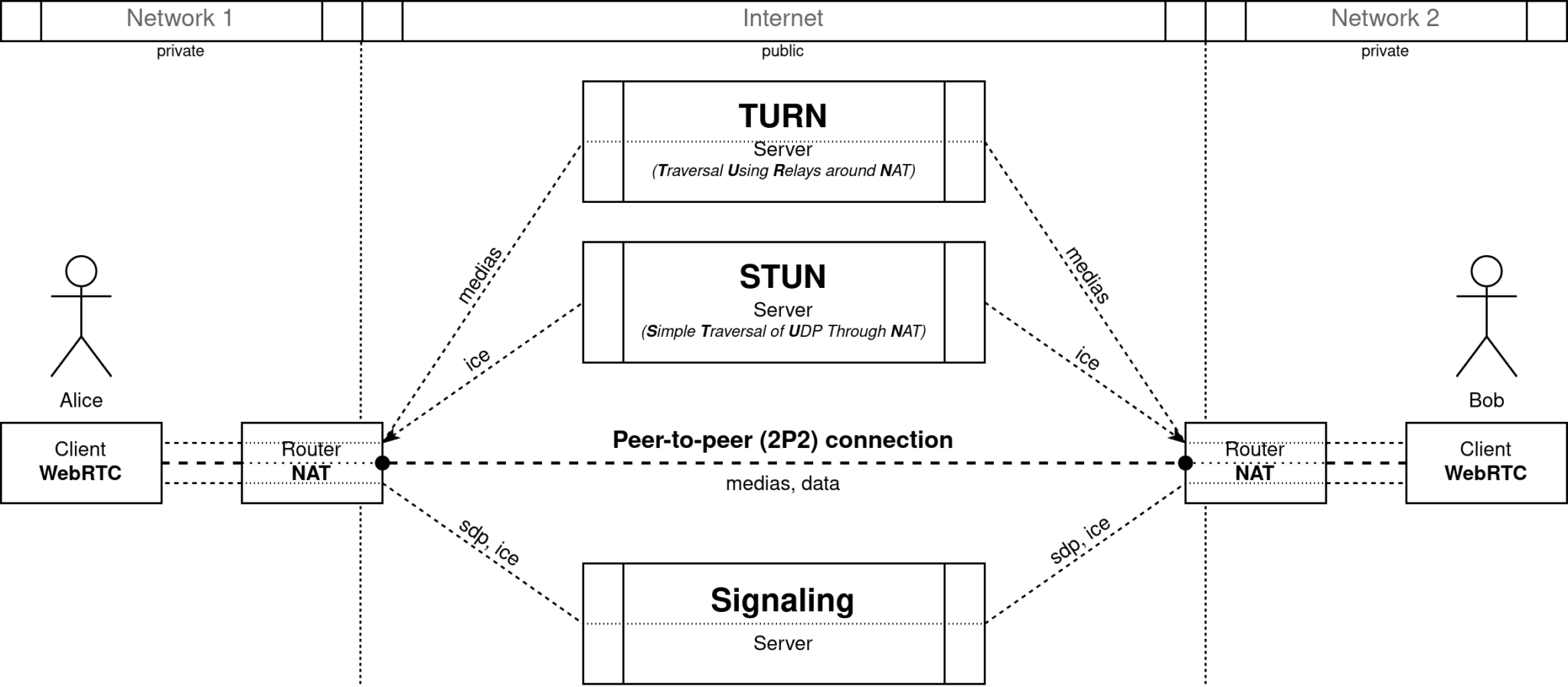

The challenge of real-time communication on the Internet is to locate two parties on the network and then connect them as efficiently as possible, ideally peer-to-peer (P2P).

NAT (Network Address Translation) is a mechanism, typically implemented in routers, that maps private IP addresses to a public one. Its purpose is to let several computers on a local network, each with a private IP address, communicate with the outside world, that is, use the Internet.

With certain protocols (typically UDP), the NAT mechanism can be exploited to establish P2P communication between two remote computers. The principle is to reuse the mapping that the NAT creates for an outgoing connection and to “force” data through it (hole punching) so that it also serves as an incoming channel.
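Hole punching can be illustrated with a toy model of two NATs in Python. The NAT behavior modeled here (one public port per internal endpoint, inbound packets accepted only from endpoints already contacted) is one common case chosen as an assumption; real NATs vary, and some defeat hole punching entirely. All addresses are documentation-range placeholders and the class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

Endpoint = Tuple[str, int]  # (IP address, UDP port)


@dataclass
class Nat:
    """Toy NAT with one public IP. Each internal endpoint keeps the same public port
    whatever the destination, and an inbound packet is accepted only if it comes
    from a remote endpoint that this internal endpoint has already sent to."""
    public_ip: str
    next_port: int = 40000
    mappings: Dict[Endpoint, int] = field(default_factory=dict)      # private -> public port
    allowed: Dict[int, Set[Endpoint]] = field(default_factory=dict)  # public port -> contacted peers

    def outbound(self, private: Endpoint, remote: Endpoint) -> Endpoint:
        """Translate an outgoing packet; returns the public source endpoint seen by `remote`."""
        if private not in self.mappings:
            self.mappings[private] = self.next_port
            self.next_port += 1
        port = self.mappings[private]
        self.allowed.setdefault(port, set()).add(remote)
        return (self.public_ip, port)

    def inbound(self, public_port: int, sender: Endpoint) -> Optional[Endpoint]:
        """Return the internal destination, or None if the NAT drops the packet."""
        if sender not in self.allowed.get(public_port, set()):
            return None
        for private, port in self.mappings.items():
            if port == public_port:
                return private
        return None


STUN_SERVER = ("192.0.2.1", 3478)  # hypothetical STUN server address
nat_a, nat_b = Nat("203.0.113.10"), Nat("198.51.100.20")
alice, bob = ("192.168.1.2", 5000), ("10.0.0.7", 6000)

# Each peer learns its public endpoint by contacting the STUN server; the two
# endpoints are then exchanged through signaling (not modeled here).
alice_pub = nat_a.outbound(alice, STUN_SERVER)
bob_pub = nat_b.outbound(bob, STUN_SERVER)

# 1. Alice sends first: her NAT now accepts traffic from Bob, but Bob's NAT drops it.
first = nat_b.inbound(bob_pub[1], nat_a.outbound(alice, bob_pub))
# 2. Bob sends to Alice: accepted, because Alice's packet "punched" a hole in her NAT.
second = nat_a.inbound(alice_pub[1], nat_b.outbound(bob, alice_pub))
# 3. Alice sends again: Bob's NAT now accepts it too, so both directions work.
third = nat_b.inbound(bob_pub[1], nat_a.outbound(alice, bob_pub))
print(first, second, third)  # prints "None ('192.168.1.2', 5000) ('10.0.0.7', 6000)"
```

The first packet being dropped is expected: its only role is to open the sender's own NAT for the reply.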

However, the remote peer needs certain information to set up hole punching. This is where a STUN (Session Traversal Utilities for NAT) server comes in: its role is to tell each client how it appears from the outside, such as its public IP address, the public port assigned by the NAT, and the type of NAT in use. This information must then be sent to the remote peer, which is the job of signaling.
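To make the STUN exchange concrete, here is a minimal Python sketch of a STUN Binding request and of decoding the XOR-MAPPED-ADDRESS attribute of the response, following the message layout of RFC 5389. It is an illustration under simplifying assumptions: IPv4 only, no retransmission, no message integrity, and the function names are hypothetical.

```python
import ipaddress
import os
import socket
import struct
from typing import Tuple

MAGIC_COOKIE = 0x2112A442
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(transaction_id: bytes) -> bytes:
    """20-byte STUN header: message type, attribute length (0), magic cookie, transaction ID."""
    return struct.pack("!HHI12s", BINDING_REQUEST, 0, MAGIC_COOKIE, transaction_id)


def parse_xor_mapped_address(response: bytes, transaction_id: bytes) -> Tuple[str, int]:
    """Extract the public IPv4 address and port from a Binding success response."""
    msg_type, length, cookie, tid = struct.unpack("!HHI12s", response[:20])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE or tid != transaction_id:
        raise ValueError("not a matching STUN Binding success response")
    offset = 20
    while offset + 4 <= 20 + length:
        attr_type, attr_len = struct.unpack("!HH", response[offset:offset + 4])
        value = response[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and value[1] == 0x01:  # family 0x01 = IPv4
            x_port, x_addr = struct.unpack("!HI", value[2:8])
            port = x_port ^ (MAGIC_COOKIE >> 16)       # port is XORed with the cookie's top 16 bits
            ip = str(ipaddress.IPv4Address(x_addr ^ MAGIC_COOKIE))
            return ip, port
        offset += 4 + (attr_len + 3) // 4 * 4  # attribute values are padded to 4 bytes
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute")


def stun_query(server: Tuple[str, int], timeout: float = 2.0) -> Tuple[str, int]:
    """Ask a STUN server how this machine's UDP socket appears from the outside."""
    transaction_id = os.urandom(12)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_binding_request(transaction_id), server)
        response, _ = sock.recvfrom(2048)
    return parse_xor_mapped_address(response, transaction_id)
```

With network access, `stun_query(("stun.example.org", 3478))` would return the public address and port as a tuple; the host name is a placeholder for a STUN server you can reach. In a browser, this exchange is performed automatically when STUN servers are listed in the `iceServers` configuration of `RTCPeerConnection`.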

When developing a WebRTC application, signaling is the part left “free” to implement: WebRTC does not specify it, so it is up to the developer to provide a channel (for example, a WebSocket server) through which the two clients exchange this information.

Two types of information must be exchanged:

- the SDP (Session Description Protocol) description, which describes the media stream configuration and serves as an invitation (the offer) and as the response to that invitation (the answer) between the two “WebRTC clients”;

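- the ICE (Interactive Connectivity Establishment) candidates, which list the network addresses at which each client may be reachable, including those obtained from the STUN server.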
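Since WebRTC leaves signaling to the developer, here is one possible shape for these messages, sketched in Python as a hypothetical in-memory relay with JSON envelopes (`kind` and `from` are invented field names). Only the inner fields mirror the browser API: `type`/`sdp` as in `RTCSessionDescription`, and `candidate`/`sdpMid`/`sdpMLineIndex` as in `RTCIceCandidateInit`. A real deployment would typically carry these messages over a WebSocket or HTTP server.

```python
import json
from collections import defaultdict, deque
from typing import Deque, Dict


class SignalingRelay:
    """Hypothetical signaling server: it only forwards opaque JSON messages
    between named clients and never inspects the media configuration."""

    def __init__(self) -> None:
        self.inboxes: Dict[str, Deque[str]] = defaultdict(deque)

    def send(self, sender: str, recipient: str, payload: dict) -> None:
        self.inboxes[recipient].append(json.dumps({"from": sender, **payload}))

    def receive(self, client: str) -> dict:
        return json.loads(self.inboxes[client].popleft())


def offer_message(sdp: str) -> dict:
    # Same inner shape as a browser RTCSessionDescription: {"type": ..., "sdp": ...}
    return {"kind": "description", "description": {"type": "offer", "sdp": sdp}}


def answer_message(sdp: str) -> dict:
    return {"kind": "description", "description": {"type": "answer", "sdp": sdp}}


def candidate_message(candidate: str, sdp_mid: str, sdp_mline_index: int) -> dict:
    # Same inner fields as a browser RTCIceCandidateInit dictionary.
    return {"kind": "candidate",
            "candidate": {"candidate": candidate, "sdpMid": sdp_mid,
                          "sdpMLineIndex": sdp_mline_index}}


relay = SignalingRelay()
# Placeholder SDP text: a real offer is produced by the browser (createOffer) and is much longer.
relay.send("operator", "robot", offer_message("v=0\r\n"))
relay.send("robot", "operator", answer_message("v=0\r\n"))
relay.send("operator", "robot",
           candidate_message("candidate:1 1 udp 2130706431 192.168.1.2 5000 typ host", "0", 0))

msg = relay.receive("robot")
print(msg["from"], msg["description"]["type"])  # prints "operator offer"
```

Keeping the relay ignorant of the payload is a deliberate choice: the server only routes messages, so the same signaling channel works whatever media configuration the clients negotiate.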

Chronologically, the SDP offer and answer are exchanged first, then a series of ICE candidates is exchanged as the two peers try to agree on a path and establish P2P communication. If this second step fails, a TURN (Traversal Using Relays around NAT) server takes over, and the streams are relayed through it.
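One way to picture why TURN is a last resort: ICE ranks candidates with a priority formula (RFC 8445, section 5.1.2) in which the recommended type preference is 126 for host, 110 for peer-reflexive, 100 for server-reflexive and 0 for relayed (TURN) candidates. The Python sketch below applies that formula and tries candidates in order against a simulated check. It is a simplification: real ICE checks pairs of local and remote candidates using pair priorities and STUN connectivity checks, and the `check` callback is a hypothetical stand-in for the network.

```python
from typing import Callable, List, NamedTuple, Optional

# Recommended type preferences from RFC 8445.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


class Candidate(NamedTuple):
    kind: str           # "host", "prflx", "srflx" or "relay"
    address: str
    port: int
    component: int = 1  # 1 = RTP component


def priority(c: Candidate, local_preference: int = 65535) -> int:
    """2^24 * type preference + 2^8 * local preference + (256 - component ID)."""
    return (TYPE_PREFERENCE[c.kind] << 24) + (local_preference << 8) + (256 - c.component)


def select_path(candidates: List[Candidate],
                check: Callable[[Candidate], bool]) -> Optional[Candidate]:
    """Try candidates from highest to lowest priority. Relay (TURN) candidates
    have the lowest type preference, so they win only if direct paths fail."""
    for c in sorted(candidates, key=priority, reverse=True):
        if check(c):
            return c
    return None


candidates = [
    Candidate("relay", "192.0.2.50", 3478),
    Candidate("host", "192.168.1.2", 5000),
    Candidate("srflx", "203.0.113.10", 40000),
]
# Simulated network where hole punching fails: only the TURN relay is reachable.
chosen = select_path(candidates, check=lambda c: c.kind == "relay")
print(chosen.kind)  # prints "relay"
```

When the direct paths work, the same function returns the host or server-reflexive candidate instead, and the streams never touch the relay.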


Other features of WebRTC technology

- Once the connection between the two “peers” is established (P2P or via TURN), it is also possible to open what is called a data channel.

This channel can carry arbitrary application data, for example the wheel steering commands of a remote robot.
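As an illustration of what might travel over such a channel, here is a Python sketch of a compact steering command and of the robot-side logic receiving it. The wire format, class names and 0.5 s safety timeout are hypothetical assumptions, not the protocol of BEAM or any real robot. In a browser, the encoded bytes would be sent with `RTCDataChannel.send()` on a channel created by `createDataChannel()`; for steering, an unordered channel without retransmissions (`ordered: false`, `maxRetransmits: 0`) is a common choice, since a late command is useless, which is why the receiver below discards out-of-order packets.

```python
import struct
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical wire format: sequence number (uint32), linear speed in m/s (float32),
# angular speed in rad/s (float32), network byte order. 12 bytes per command.
COMMAND_FORMAT = "!Iff"


@dataclass
class DriveCommand:
    seq: int
    linear: float
    angular: float

    def encode(self) -> bytes:
        return struct.pack(COMMAND_FORMAT, self.seq, self.linear, self.angular)

    @classmethod
    def decode(cls, payload: bytes) -> "DriveCommand":
        return cls(*struct.unpack(COMMAND_FORMAT, payload))


class RobotSide:
    """Applies the newest command and stops the wheels if commands stop arriving."""

    def __init__(self, timeout_s: float = 0.5) -> None:
        self.timeout_s = timeout_s
        self.last_seq = -1
        self.last_time: Optional[float] = None
        self.speed: Tuple[float, float] = (0.0, 0.0)

    def on_message(self, payload: bytes, now: float) -> None:
        cmd = DriveCommand.decode(payload)
        if cmd.seq <= self.last_seq:  # late or duplicate packet on an unordered channel
            return
        self.last_seq, self.last_time = cmd.seq, now
        self.speed = (cmd.linear, cmd.angular)

    def tick(self, now: float) -> None:
        # Safety watchdog: no fresh command within the timeout, so stop the robot.
        if self.last_time is None or now - self.last_time > self.timeout_s:
            self.speed = (0.0, 0.0)


robot = RobotSide()
robot.on_message(DriveCommand(2, 0.5, -0.25).encode(), now=0.0)
robot.on_message(DriveCommand(1, 1.0, 0.0).encode(), now=0.01)  # stale: ignored
print(robot.speed)  # prints "(0.5, -0.25)"
robot.tick(now=1.0)
print(robot.speed)  # prints "(0.0, 0.0)"
```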

- WebRTC also includes a mechanism that copes with changing network conditions by adapting the amount of data sent, in particular for audio and video streams. This is why it handles video encoding and decoding itself: it can adjust the encoder's output on the fly.

For example, this variation in video bitrate is visible in the BEAM driver software: video quality may drop slightly while the robot is moving and improve when it stops. This comes from how codecs work: a moving scene contains more information (more changes from one frame to the next) than a still one.
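The adaptation described above can be illustrated with a simplified, loss-based rate controller in Python. The rule (cut the rate in proportion to packet loss above 10%, raise it by 5% below 2%, hold it in between) is modeled on the loss-based controller described in the Google Congestion Control (GCC) Internet-Draft; real WebRTC implementations also react to delay variations and differ in detail, and the bounds and loss reports here are hypothetical values.

```python
from typing import Iterable, List


def adapt_bitrate(current_bps: float, loss_fraction: float,
                  min_bps: float = 150_000, max_bps: float = 2_500_000) -> float:
    """One step of a simplified loss-based rate controller."""
    if loss_fraction > 0.10:    # heavy loss: back off in proportion to the loss
        current_bps *= 1 - 0.5 * loss_fraction
    elif loss_fraction < 0.02:  # clean network: probe gently upwards (+5%)
        current_bps *= 1.05
    # between 2% and 10% loss: hold the current rate
    return max(min_bps, min(max_bps, current_bps))


def simulate(start_bps: float, losses: Iterable[float]) -> List[int]:
    """Apply one controller step per loss report and record the resulting rates."""
    rates, rate = [], start_bps
    for loss in losses:
        rate = adapt_bitrate(rate, loss)
        rates.append(round(rate))
    return rates


# Hypothetical loss reports: two clean intervals, a congested one, a mild one, recovery.
print(simulate(1_000_000, [0.0, 0.0, 0.3, 0.05, 0.0]))
```

In a browser, this loop is not written by the application: the WebRTC engine runs its own congestion control, and the application can at most cap the rate, for example through the `maxBitrate` field of the encodings passed to `RTCRtpSender.setParameters()`.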

- Another feature of WebRTC is that this building block can synchronize the playback of the different media streams over time.

For example, with a telepresence robot, the top and bottom camera feeds should be synchronized in the control interface; otherwise, the user may have the impression that the head moves without the base, or vice versa. The same applies to sound, notably lip synchronization, which keeps speech looking natural when a person talks.
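WebRTC carries media over RTP: each stream has its own timestamp clock with a random starting value, and periodic RTCP Sender Reports (RFC 3550) relate that clock to the sender's wall clock. A receiver can use these reports to place frames from different streams on a common timeline. The Python sketch below pairs frames from two hypothetical camera streams this way; it is a simplification of what the browser's playout logic does, and the frame names, timestamps and report values are invented.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SenderReport:
    """Timing pair carried by an RTCP Sender Report: the sender's wall-clock time
    (in seconds here; NTP format on the wire) and the stream's RTP timestamp
    at that same instant."""
    wallclock_s: float
    rtp_timestamp: int


def to_wallclock(rtp_ts: int, sr: SenderReport, clock_rate: int) -> float:
    """Map an RTP timestamp to the sender's wall clock, handling 32-bit wraparound."""
    delta = (rtp_ts - sr.rtp_timestamp) % 2**32
    if delta >= 2**31:  # timestamp slightly earlier than the report
        delta -= 2**32
    return sr.wallclock_s + delta / clock_rate


def pair_frames(top: List[Tuple[int, str]], bottom: List[Tuple[int, str]],
                sr_top: SenderReport, sr_bottom: SenderReport,
                clock_rate: int = 90_000) -> List[Tuple[str, str]]:
    """For each top-camera frame, pick the bottom-camera frame closest in wall-clock time."""
    bottom_times = [(to_wallclock(ts, sr_bottom, clock_rate), name) for ts, name in bottom]
    pairs = []
    for ts, name in top:
        t = to_wallclock(ts, sr_top, clock_rate)
        closest = min(bottom_times, key=lambda item: abs(item[0] - t))
        pairs.append((name, closest[1]))
    return pairs


# Unrelated RTP starting points; the bottom camera's clock wraps past 2^32 after its first frame.
sr_top = SenderReport(wallclock_s=100.0, rtp_timestamp=1_000)
sr_bottom = SenderReport(wallclock_s=100.0, rtp_timestamp=4_294_965_000)
top = [(1_000 + 3_000 * i, f"top{i}") for i in range(3)]  # 90 kHz clock, 30 fps: 3000 ticks/frame
bottom = [((4_294_965_000 + 3_000 * i) % 2**32, f"bottom{i}") for i in range(3)]
print(pair_frames(top, bottom, sr_top, sr_bottom))
# prints "[('top0', 'bottom0'), ('top1', 'bottom1'), ('top2', 'bottom2')]"
```

Comparing the raw RTP timestamps (for example 4,000 against 704 for the second frames) would be meaningless; only after mapping both to the sender's wall clock do the frames line up. Audio works the same way, typically with a 48 kHz clock for Opus instead of 90 kHz.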

Do you have a project involving WebRTC? With complementary expertise covering every aspect of service robotics, the Awabot Intelligence team can support you. Contact us.